3.2 BPaaS Monitoring Engine

3.2.a Distributed and self-scalable Monitoring Engine

Provides a self-scaling monitoring architecture with a flexible time-series database (TSDB) storage engine and customisable sensors.
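The following is a minimal Python sketch of the plug-in points implied by "customisable sensors" and "flexible TSDB storage". Sensors implement a common interface, and an agent writes their readings to a swappable storage backend. All class and metric names are hypothetical. The in-memory store only stands in for a real TSDB, and the self-scaling part of the architecture is not modelled.

```python
"""Sketch of a pluggable-sensor monitoring agent (hypothetical names throughout)."""
from abc import ABC, abstractmethod
from collections import defaultdict
import time


class Sensor(ABC):
    """Base class for a customisable sensor; subclasses implement measure()."""

    def __init__(self, metric_name, tags=None):
        self.metric_name = metric_name
        self.tags = tags or {}

    @abstractmethod
    def measure(self):
        """Return a single numeric measurement."""


class InMemoryTSDB:
    """Stand-in for a real TSDB: stores (timestamp_ms, value, tags) per metric."""

    def __init__(self):
        self.series = defaultdict(list)

    def write(self, metric, timestamp_ms, value, tags):
        self.series[metric].append((timestamp_ms, value, dict(tags)))


class MonitoringAgent:
    """Runs every registered sensor once per collection round."""

    def __init__(self, storage):
        self.storage = storage
        self.sensors = []

    def register(self, sensor):
        self.sensors.append(sensor)

    def collect_once(self):
        now_ms = int(time.time() * 1000)
        for sensor in self.sensors:
            self.storage.write(sensor.metric_name, now_ms, sensor.measure(), sensor.tags)


class ConstantSensor(Sensor):
    """Toy sensor for illustration; a real one would read CPU, memory, etc."""

    def __init__(self, metric_name, value, tags=None):
        super().__init__(metric_name, tags)
        self.value = value

    def measure(self):
        return self.value


tsdb = InMemoryTSDB()
agent = MonitoringAgent(tsdb)
agent.register(ConstantSensor("cpu.usage", 42.0, {"vm": "vm-1"}))
agent.collect_once()
```

Swapping the storage backend only requires another object with a `write` method, and adding a sensor only requires subclassing `Sensor`.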

Information

BPaaS Allocation and Execution Environment Blueprints

Springer Publication

Extend

Axe Aggregator

Additional Information

Originator: Frank Griesinger (UULM)

Technology Readiness Level: 2

Related CloudSocket Environment: Execution

3.2.b Cross-Layer Monitoring Engine

Information

Description: This research prototype describes how a BPaaS can be monitored across all operational layers: workflow, service and infrastructure.

Research Question

How can a BPaaS be monitored across all operational layers in order to gain a clear view of its performance?

Which metrics can be defined in each layer, and how can their dependencies be modelled?
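One way to picture the second question is a dependency graph in which raw metrics are supplied by sensors and derived metrics are computed from lower-level ones. The Python sketch below evaluates such a graph in dependency order with the standard-library `graphlib.TopologicalSorter` (Python 3.9+). The metric names, the layer assignment and both formulas (availability as uptime times success ratio; total time as the sum of three sequential task times) are illustrative assumptions, not the prototype's metric model.

```python
from graphlib import TopologicalSorter

# Hypothetical cross-layer metric model. Raw metrics have no inputs and are
# supplied by sensors; derived metrics are computed from their inputs.
METRICS = {
    "infra.vm.uptime_ratio": {"layer": "infrastructure", "inputs": [], "fn": None},
    "svc.success_ratio": {"layer": "service", "inputs": [], "fn": None},
    "svc.create_client_ms": {"layer": "service", "inputs": [], "fn": None},
    "svc.create_invoice_ms": {"layer": "service", "inputs": [], "fn": None},
    "svc.create_payment_ms": {"layer": "service", "inputs": [], "fn": None},
    # Assumption: the service is only available while its VM is up.
    "svc.availability": {
        "layer": "service",
        "inputs": ["infra.vm.uptime_ratio", "svc.success_ratio"],
        "fn": lambda up, ok: up * ok,
    },
    # Assumption: the three tasks run sequentially, so their times add up.
    "wf.total_ms": {
        "layer": "workflow",
        "inputs": ["svc.create_client_ms", "svc.create_invoice_ms", "svc.create_payment_ms"],
        "fn": lambda a, b, c: a + b + c,
    },
}


def evaluate(metrics, raw_values):
    """Compute every derived metric only after all metrics it depends on."""
    graph = {name: spec["inputs"] for name, spec in metrics.items()}
    values = dict(raw_values)
    for name in TopologicalSorter(graph).static_order():
        spec = metrics[name]
        if spec["fn"] is not None:
            values[name] = spec["fn"](*(values[i] for i in spec["inputs"]))
    return values


result = evaluate(METRICS, {
    "infra.vm.uptime_ratio": 0.99,
    "svc.success_ratio": 0.98,
    "svc.create_client_ms": 120.0,
    "svc.create_invoice_ms": 200.0,
    "svc.create_payment_ms": 150.0,
})
```

Making the dependencies explicit data also lets a cycle be detected up front, since `TopologicalSorter` raises `graphlib.CycleError` for cyclic definitions.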

Added Value: The broker gains a clear view of the performance of the BPaaS and its constituent components across all operational layers.

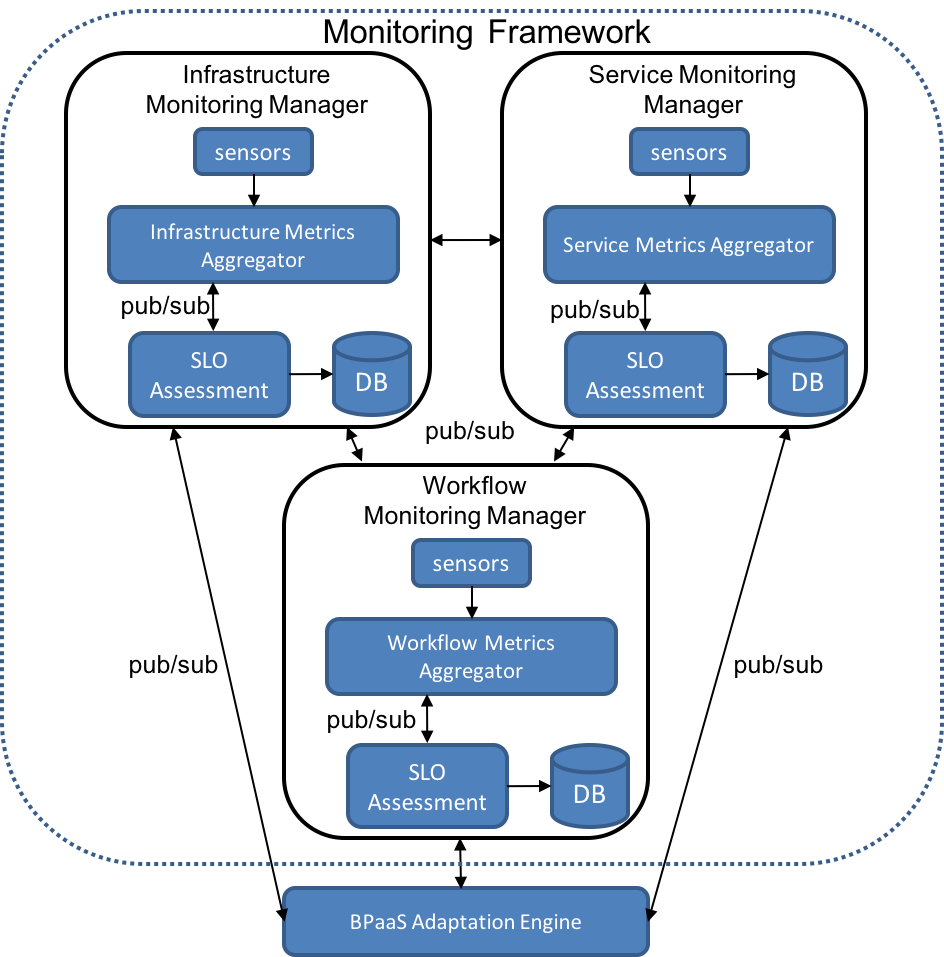

Architecture

Monitoring sensors: provide measurements for specific metrics at each operational layer

Metric aggregator: collects the monitoring data of each layer

SLO Assessment component: assesses the monitoring data to detect SLO violations

Pub/sub: a publish/subscribe mechanism that transfers measurements among the monitoring managers and the adaptation engine.
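The aggregator and SLO assessment roles listed above can be sketched in a few lines of Python. This is an illustrative assumption, not the prototype's code. SLOs are simplified to upper bounds on the average value of a metric, and the resulting violations would, in the described architecture, be handed to the pub/sub mechanism (not modelled here). All names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Measurement:
    layer: str
    metric: str
    value: float


class MetricAggregator:
    """Collects raw measurements per (layer, metric) and summarises them."""

    def __init__(self):
        self._data = defaultdict(list)

    def add(self, m):
        self._data[(m.layer, m.metric)].append(m.value)

    def average(self, layer, metric):
        return mean(self._data[(layer, metric)])


@dataclass
class SLO:
    layer: str
    metric: str
    max_value: float  # assumption: SLOs expressed as upper bounds only


def assess(aggregator, slos):
    """Return (slo, observed_average) for every SLO whose bound is exceeded."""
    violations = []
    for slo in slos:
        avg = aggregator.average(slo.layer, slo.metric)
        if avg > slo.max_value:
            violations.append((slo, avg))
    return violations


agg = MetricAggregator()
for v in (180.0, 260.0, 220.0):
    agg.add(Measurement("service", "create_invoice_ms", v))
agg.add(Measurement("workflow", "total_ms", 450.0))

violations = assess(agg, [
    SLO("service", "create_invoice_ms", 200.0),
    SLO("workflow", "total_ms", 500.0),
])
```

Here the invoice-creation average (220 ms) breaks its 200 ms bound, while the workflow total stays within 500 ms.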

Demo (video)

Input: An InvoiceNinja application workflow deployed locally, consisting of three sequential tasks (Create client, Create invoice and Create payment). The IP address of the application and an access token for it are required.

Story: This demo shows monitoring at the workflow and service layers. Monitoring at the infrastructure layer is provided by UULM, and we are working on a combined cross-layer monitoring framework.

First, the workflow monitoring sensor (a Java-based class) is deployed; it periodically makes GET calls to the InvoiceNinja API to collect the number of clients, invoices and payments.
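The polling behaviour of the workflow sensor can be illustrated with the Python sketch below. It does not reproduce the original Java class. The endpoint paths (`/api/v1/clients` etc.), the `X-Ninja-Token` header and the `{"data": [...]}` response envelope are assumptions about the InvoiceNinja API version in use and should be checked against its documentation. Pagination is ignored, so counts are only correct for small data sets. The `fetch` parameter is a hypothetical hook that allows a fake HTTP client to be injected for testing.

```python
import json
import time
import urllib.request

# Assumption: one REST collection per counted entity.
RESOURCES = ("clients", "invoices", "payments")


def http_fetch(url, token):
    """GET a JSON document; the token header name is an assumption."""
    req = urllib.request.Request(url, headers={"X-Ninja-Token": token})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def poll_once(base_url, token, fetch=http_fetch):
    """Return {resource: count} for one polling round (first page only)."""
    counts = {}
    for resource in RESOURCES:
        body = fetch(f"{base_url}/api/v1/{resource}", token)
        counts[resource] = len(body.get("data", []))
    return counts


def run_sensor(base_url, token, interval_s, rounds, sink, fetch=http_fetch):
    """Poll `rounds` times, passing (timestamp, counts) to sink after each round."""
    for i in range(rounds):
        sink(time.time(), poll_once(base_url, token, fetch))
        if i < rounds - 1:
            time.sleep(interval_s)
```

In a deployment, `sink` would forward the three counts to the metric store; for a quick offline check, a dictionary-backed `fetch` can replace the HTTP call.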

Next, we use the Activiti workflow engine (Eclipse plugin) to run a simple workflow that creates a client, an invoice and a payment, and we monitor the execution time of each corresponding service as well as of the whole process.
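Measuring per-task and whole-process execution times follows a simple nesting pattern. The Python sketch below times each task inside an outer timer for the entire process. It is a language-neutral illustration only: it does not use the Activiti API, and the sleeping lambdas are hypothetical stand-ins for the real service tasks.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(name, results):
    """Record the wall-clock duration (in ms) of the enclosed block under `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[name] = (time.perf_counter() - start) * 1000.0


def run_process(tasks):
    """Run (name, callable) tasks sequentially; return per-task and total times in ms."""
    results = {}
    with timed("total", results):
        for name, task in tasks:
            with timed(name, results):
                task()
    return results


# Hypothetical stand-ins for the three service tasks.
times = run_process([
    ("create_client", lambda: time.sleep(0.01)),
    ("create_invoice", lambda: time.sleep(0.02)),
    ("create_payment", lambda: time.sleep(0.01)),
])
```

Because the tasks run sequentially, the total is at least the sum of the task times; the difference is the orchestration overhead.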

Output: Measurements for seven metrics: three at the workflow layer (number of clients, invoices and payments) and four at the service layer (execution time of client creation, invoice creation and payment creation, plus total execution time). The measurements are stored and can be visualized through the KairosDB Web UI.
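For storage in KairosDB, measurements are typically grouped per metric into the JSON body accepted by its `POST /api/v1/datapoints` REST endpoint: a list of objects with `name`, `datapoints` (`[timestamp_ms, value]` pairs) and `tags`. KairosDB requires at least one tag per metric. The Python sketch below builds and sends such a payload. The metric names, the `app` tag and the `localhost:8080` address are illustrative assumptions, not the demo's configuration.

```python
import json
import urllib.request


def to_kairosdb(measurements, tags):
    """Group (metric, timestamp_ms, value) tuples into KairosDB's datapoints JSON."""
    by_metric = {}
    for metric, ts_ms, value in measurements:
        by_metric.setdefault(metric, []).append([ts_ms, value])
    return [
        {"name": metric, "datapoints": points, "tags": dict(tags)}
        for metric, points in by_metric.items()
    ]


def push(payload, url="http://localhost:8080/api/v1/datapoints"):
    """POST the payload; KairosDB replies 204 No Content on success."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


# Hypothetical metric names for two workflow-layer samples and one service-layer sample.
payload = to_kairosdb(
    [("wf.clients", 1000, 5), ("wf.clients", 2000, 6), ("svc.total_ms", 2000, 470.0)],
    {"app": "invoiceninja"},
)
```

Once stored, the series can be queried and graphed in the KairosDB Web UI by metric name and tag.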

UI: Activiti workflow Engine (Eclipse plugin), KairosDB TSDB Web UI

Information

Closer slide set

Webinar

Poster

ESOCC Paper: Cross Layer Monitoring

Peace 2016 publication on distributed layer monitoring

CloudSocket slide set on cross layer monitoring

Extend

GitHub Infrastructure Layer

GitHub Service Layer Aggregator

Scalability Elasticity Aggregator

GitHub fortress WebServices

GitHub Activiti Workflow Engine

GitHub pubSubSystem

Additional Information

Originator: Kyriakos Kritikos (FORTH)

Technology Readiness Level: 4

Related CloudSocket Environment: Execution

3.2.c Synergic Cross-Layer Monitoring Framework

Research Problem

Many monitoring frameworks have been proposed and offered, but they usually cover only one or two levels of abstraction. Moreover, such frameworks usually rely on level-specific mechanisms that assess the metrics of each level in isolation. This leads to measurability gaps: because adjacent levels cannot cooperate, measurements of a high-level metric cannot be produced from measurements of lower-level metrics.

As a result, most monitoring frameworks support only a limited, fixed set of metrics. A related problem is metric computability and specification flexibility: given a high-level but complete description of a metric, which may also evolve over time, these frameworks cannot produce its measurements. Instead, they rely on a rather technical mapping from the fixed descriptions of the few supported metrics to the sensing or aggregation code that measures them.
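The alternative to a fixed metric-to-code mapping can be illustrated by treating a metric description as data that a generic evaluator interprets. Defining or changing a metric then means editing its description rather than writing new aggregation code. The Python sketch below is a hypothetical illustration: the description fields (`function`, `input`, `window_s`) and the small function vocabulary are assumptions, not the frameworks' actual metric language.

```python
from statistics import mean

# Hypothetical vocabulary of aggregation functions a metric description may use.
FUNCTIONS = {"avg": mean, "max": max, "min": min, "sum": sum, "count": len}


def compute(spec, series, now_s):
    """Evaluate a metric described as data rather than code.

    spec   -- e.g. {"function": "avg", "input": "svc.create_invoice_ms", "window_s": 60}
    series -- {metric_name: [(timestamp_s, value), ...]}
    Returns None when the window contains no measurements.
    """
    fn = FUNCTIONS[spec["function"]]
    start = now_s - spec["window_s"]
    window = [v for ts, v in series.get(spec["input"], []) if start < ts <= now_s]
    return fn(window) if window else None


series = {"svc.create_invoice_ms": [(10, 100.0), (50, 200.0), (70, 300.0)]}
avg_30s = compute({"function": "avg", "input": "svc.create_invoice_ms", "window_s": 30}, series, now_s=70)
count_100s = compute({"function": "count", "input": "svc.create_invoice_ms", "window_s": 100}, series, now_s=70)
```

Both metrics are produced by the same evaluator; only their descriptions differ.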

These limitations of current monitoring frameworks highlight the need for a cross-layer monitoring framework that can measure metrics across different levels and close measurability gaps by being able to measure any kind of metric. Such a framework should also rely on a flexible, complete and high-level description of a metric that is independent of any technical specifics.

Solution

FORTH and the University of Ulm (UULM) have developed two separate monitoring frameworks that cover different levels of abstraction: the FORTH framework mainly covers the workflow and service levels, while the UULM framework mainly covers the infrastructure and platform levels. Integrating the two can therefore yield a synergic framework that covers the measurement of metrics at all levels. Such a synergic framework has been designed and is currently under development, building on the individual capabilities of both frameworks. Each individual framework is treated as a service that offers monitoring and SLO evaluation capabilities. Cross-layer aggregation of metric measurements between the two frameworks is handled by a publish-subscribe mechanism: one framework subscribes to the lower-level measurements it needs in order to aggregate a measurement for a higher-level metric under its responsibility. The combined framework also offers a second publish-subscribe mechanism through which interested components, such as an Adaptation Engine, can retrieve the SLO evaluations produced and enact the corresponding adaptation actions.
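The subscription pattern described above can be sketched in Python with an in-process broker standing in for the real pub/sub system. A higher-level framework subscribes to infrastructure topics published by the lower-level one, derives a higher-level measurement, and publishes SLO violations on a topic that an Adaptation Engine could consume. The topic names, the message fields and the choice of "average CPU across the service's VMs" as the derived metric are hypothetical.

```python
from collections import defaultdict


class Broker:
    """Minimal in-process topic broker standing in for the real pub/sub system."""

    def __init__(self):
        self._subs = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subs[topic].append(callback)

    def publish(self, topic, message):
        for callback in list(self._subs[topic]):
            callback(message)


class ServiceLevelAggregator:
    """Hypothetical higher-level framework: derives a service metric from
    infrastructure measurements published by the lower-level framework."""

    def __init__(self, broker, vm_ids, threshold):
        self.broker = broker
        self.vm_ids = set(vm_ids)
        self.threshold = threshold
        self.latest = {}
        for vm in self.vm_ids:
            broker.subscribe(f"infra.{vm}.cpu", self.on_cpu)

    def on_cpu(self, msg):
        self.latest[msg["vm"]] = msg["value"]
        if set(self.latest) == self.vm_ids:  # wait until every VM has reported
            avg = sum(self.latest.values()) / len(self.latest)
            self.broker.publish("svc.cpu.avg", {"value": avg})
            if avg > self.threshold:
                self.broker.publish("slo.violations", {"metric": "svc.cpu.avg", "value": avg})


broker = Broker()
violations = []
broker.subscribe("slo.violations", violations.append)  # e.g. an Adaptation Engine
aggregator = ServiceLevelAggregator(broker, ["vm1", "vm2"], threshold=80.0)
broker.publish("infra.vm1.cpu", {"vm": "vm1", "value": 90.0})
broker.publish("infra.vm2.cpu", {"vm": "vm2", "value": 80.0})
```

Subscribing per topic keeps each framework unaware of the other's internals: it only needs to know which lower-level measurements its higher-level metrics depend on.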

Other notable features of the combined framework, which correspond to features common to both individual frameworks, are: recovery in case a monitoring component fails; data redundancy to avoid losing important monitoring data; and the capability to monitor both domain-independent and domain-specific metrics.

Architecture

The architecture of the combined (synergic) monitoring framework is shown in the following figure. It comprises the two individual frameworks, which communicate via a publish-subscribe mechanism to cover measurability gaps across levels. The same mechanism is also used to propagate measurements to the Evaluator component, which includes a complex event processing (CEP) engine responsible for evaluating conditions over the propagated measurements.
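The kind of condition the Evaluator's CEP engine checks can be approximated by a sliding-window rule. The rule fires an event when the average of the last N measurements of a metric exceeds a threshold. The Python sketch below is a hand-rolled stand-in. A real CEP engine would express the same rule declaratively as a query, and the rule shape, metric name and threshold here are assumptions.

```python
from collections import deque


class SlidingWindowRule:
    """Fire on_event when the average of the last `size` values of `metric`
    exceeds `threshold`; measurements of other metrics are ignored."""

    def __init__(self, metric, size, threshold, on_event):
        self.metric = metric
        self.window = deque(maxlen=size)  # oldest values drop out automatically
        self.threshold = threshold
        self.on_event = on_event

    def feed(self, metric, value):
        if metric != self.metric:
            return
        self.window.append(value)
        if len(self.window) == self.window.maxlen:  # evaluate only on a full window
            avg = sum(self.window) / len(self.window)
            if avg > self.threshold:
                self.on_event({"metric": metric, "avg": avg})


events = []
rule = SlidingWindowRule("svc.create_invoice_ms", size=3, threshold=100.0, on_event=events.append)
for value in (90.0, 100.0, 120.0, 50.0):
    rule.feed("svc.create_invoice_ms", value)
rule.feed("infra.vm1.cpu", 500.0)  # different metric: ignored
```

Only the window [90, 100, 120] (average about 103.3) crosses the threshold; adding 50 brings the average back to 90.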

The events produced are further propagated to interested components, such as Adaptation Engines. The measurements can also be inspected programmatically or through a UI by two other components that belong to the Collosseum cloud orchestration engine: the Collosseum API enables programmatic retrieval of the measurements stored in the Evaluator, while the Collosseum UI produces graphs over these measurements. Both components can rely on the measurements propagated by the individual monitoring frameworks; in principle, they may also require further aggregation of the stored measurements, which the embedded CEP engine supports natively.

This innovation item will be made available on GitLab.

Information

BPaaS Allocation and Execution Environment Blueprint

BPaaS Allocation and Execution Environment Prototypes

CloudSocket Wiki on Research Problem

CloudSocket Wiki on Tool

CloudSocket Wiki on Solution

CloudSocket Wiki on Architecture

Use

https://omi-gitlab.e-technik.uni-ulm.de/cloudsocket/cross_layer_monitoring

Extend

GitHub Cross Layer Monitor

Additional Information

Originator: Chris Zegiris (FORTH)

Technology Readiness Level: 5

Related CloudSocket Environment: Execution

Additional Information

Originator: Kyriakos Kritikos (FORTH)

Technology Readiness Level:

Related CloudSocket Environment: Execution