Smart Service Discovery and Composition Blueprint

Research Problem

The Smart Service Discovery and Composition tool automates BPaaS allocation, which is currently performed manually by the BPaaS broker. The broker faces a two-fold problem: (a) how to discover the services that can either realise the functionality of the BPaaS workflow service tasks or support the execution of the internal software components of these tasks; (b) how to select the best alternative from the service candidates of each task such that the user requirements are optimised at both the global and the local level.

Concerning the first problem, the current state of the art offers solutions that address just one aspect, either the functional or the non-functional one. In many cases, the respective techniques do not exploit the service semantics. In addition, the alignment of QoS terms is usually not considered in non-functional service matchmaking. These two issues lead to low precision and recall in the service discovery process. Moreover, the execution time and scalability of the respective discovery algorithms are usually unsatisfactory, because they do not employ smart techniques for organising the service advertisement space.

As far as the second problem is concerned, service selection in BPaaS allocation is usually harder than the selection of SaaS or IaaS services alone, because IaaS and SaaS selection are inter-dependent. The selection of an IaaS service can affect the QoS at the SaaS level, which can in turn influence the service selection at that level. As such, there is a need for an approach that selects IaaS and SaaS services in conjunction while still taking into account all types of user requirements. Such an approach should also cater for the case where a choice has to be made for the same service task between an external SaaS that realises its functionality and an IaaS that supports the execution of an internal software component (either owned or outsourced by the broker) realising that functionality.

In addition, because they focus on service selection at an individual level, the proposed approaches usually exhibit the following issues: (a) the dependencies between the different QoS terms are not considered; (b) a pessimistic or average-case approach is applied over the service graph and the available solution space; (c) just a single value, rather than a range of QoS values, is considered, although ranges are needed to describe services suitably in dynamic environments; (d) no solution is returned for over-constrained user requirements.

Solution Approach

The Smart Service Discovery and Composition tool enables both the semantic discovery of cloud services at different levels of abstraction and the concurrent selection of SaaS and IaaS services for service-based workflow concretisation according to the broker's functional and non-functional requirements.

The tool comprises two main modules, dedicated to cloud service discovery and selection, respectively. Its features are therefore presented per module.

Concerning service discovery, the main features of the blueprint can be summarised as follows:

- Both functional and non-functional semantic service discovery are supported, to cover all possible aspects of a service description. The functional aspect is covered by OWL-S and the non-functional one by OWL-Q.
- Smart service discovery is offered, which reduces the service matchmaking time. Smartness is realised at two levels: (a) the transactional combination of aspect-specific matchmakers according to different composition semantics (currently, parallel composition maps to the fastest implementation); (b) at the individual, aspect-specific level, each service matchmaker utilises smart structures to better organise the service advertisement space and accelerate the service matchmaking.
- The tool enables not only the matchmaking but also the management (update, insertion, deletion) of the functional and non-functional service specifications.
- Java and REST APIs are offered for service discovery and service specification management.

As far as service selection is concerned, the respective module exhibits the following features:

- Concurrent selection of both IaaS and SaaS services, to deliver optimal selection solutions for the service-based BPaaS workflow.
- Support for different realisation alternatives for a SaaS service: (a) an external SaaS service can be exploited, or (b) an internal one, which also needs to be hosted in a public or private cloud. In the latter case, the internal service code has either been purchased or been developed internally by the broker organisation.
- Consideration of a great variety of non-functional requirements, including performance and reliability ones (over, e.g., response time, throughput, availability and reliability), security ones at both a coarse-grained level (in terms of security controls) and a fine-grained level (in terms of security SLOs), and cost.
- Capability to map low-level non-functional capabilities to higher-level ones in the form of dependency functions, to cover any dependency gap within the respective optimisation problem.
- Capability to handle both linear and non-linear constraints as well as both real and integer variables. This covers the dependency functions above as well as the aggregation functions over non-functional metrics (from component/service level to application/workflow level).
- When a specific time deadline is given, the solver can be configured to take it into consideration, so that solutions are produced more rapidly at a potential cost in solution optimality.
- Parts of the problem can be fixed according to knowledge derived from a Knowledge Base by applying rules over the BPaaS execution history.
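The joint IaaS/SaaS selection described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the offer names, the QoS figures, the dependency function (response time of an internal component taken as a base time divided by the number of VM cores), the aggregation rules for a purely sequential workflow (response time and cost are summed, availability is multiplied) and the cost-minimising objective. It also enumerates all combinations by brute force, whereas the tool itself delegates the problem to a constraint solver.

```python
from itertools import product

# Hypothetical IaaS offerings that could host an internal component.
IAAS = [
    {"name": "vm.small", "cores": 1, "avail": 0.99, "cost": 10.0},
    {"name": "vm.large", "cores": 4, "avail": 0.999, "cost": 40.0},
]

# Hypothetical workflow tasks: external SaaS candidates plus, optionally,
# an internal component whose response time is known on a 1-core VM.
TASKS = {
    "invoice": {
        "saas": [{"name": "ExtInvoice", "rt": 0.8, "avail": 0.995, "cost": 30.0}],
        "internal_base_rt": 2.0,
    },
    "archive": {
        "saas": [{"name": "ExtArchive", "rt": 0.5, "avail": 0.99, "cost": 5.0}],
        "internal_base_rt": None,  # no internal realisation available
    },
}


def alternatives(task):
    """List every realisation of a task: external SaaS or internal-on-IaaS."""
    alts = [dict(offer) for offer in task["saas"]]
    base_rt = task["internal_base_rt"]
    if base_rt is not None:
        for vm in IAAS:
            alts.append({
                "name": "internal@" + vm["name"],
                # Assumed dependency function: IaaS capability -> SaaS-level QoS.
                "rt": base_rt / vm["cores"],
                "avail": vm["avail"],
                "cost": vm["cost"],
            })
    return alts


def select(tasks, max_rt, min_avail):
    """Return the cheapest plan meeting the workflow-level constraints, or None."""
    names = list(tasks)
    best = None
    for combo in product(*(alternatives(tasks[n]) for n in names)):
        rt = sum(a["rt"] for a in combo)      # sequential workflow: times add up
        cost = sum(a["cost"] for a in combo)
        avail = 1.0
        for a in combo:                        # all tasks must be available
            avail *= a["avail"]
        if rt <= max_rt and avail >= min_avail:
            if best is None or cost < best["cost"]:
                plan = {n: a["name"] for n, a in zip(names, combo)}
                best = {"plan": plan, "rt": rt, "avail": avail, "cost": cost}
    return best
```

Tightening the response-time bound in this toy setting changes which realisation of the `invoice` task wins, which is the inter-dependency between the IaaS and SaaS levels that motivates solving the two selections together.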

Architecture

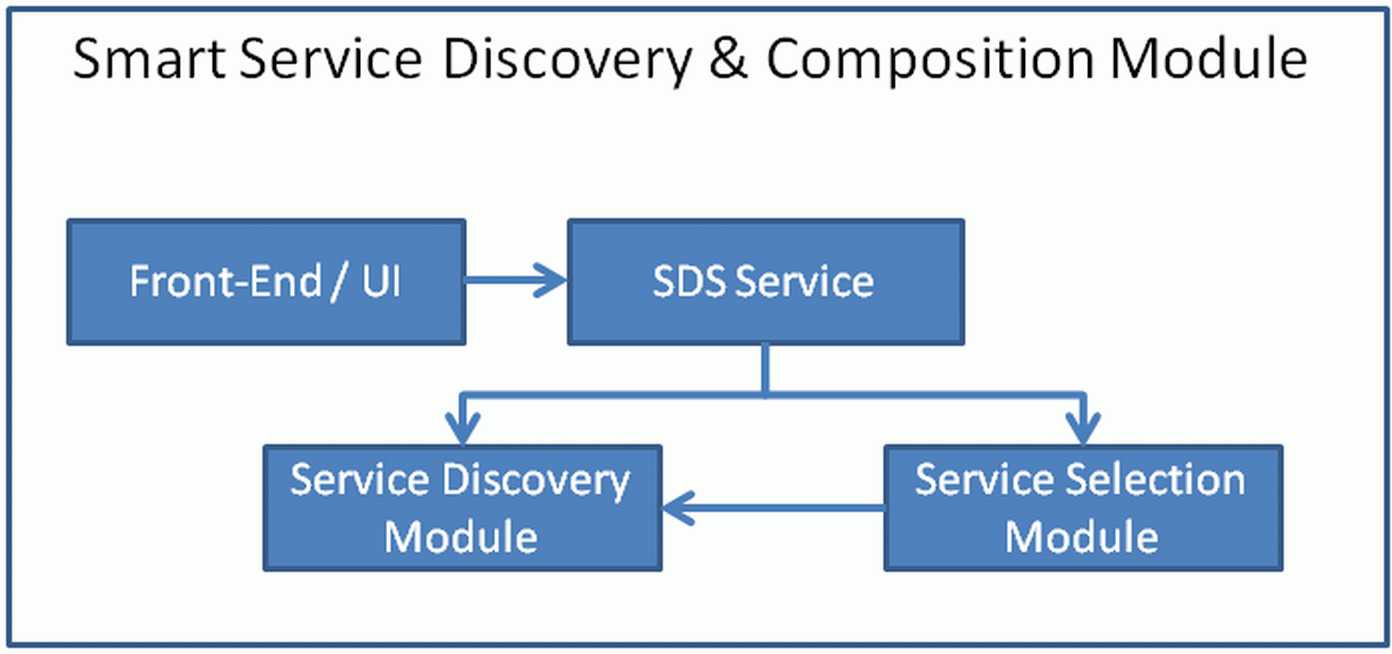

The architecture of this tool, depicted in the accompanying figure, is service-oriented and includes three main levels: UI, business logic and database. It comprises the following components at the highest level:

- The UI component offers the visualisation and editing means for initiating service discovery and workflow concretisation interactions. In the background, it performs REST calls on the next component, the REST SDS service.
- The REST SDS (Service Discovery and Selection) service encapsulates the whole functionality of the blueprint and enables programmatic access to it via REST calls. This functionality is offered via two different modules, which focus on the two main parts of service discovery and selection.
- The Service Discovery Module performs functional and non-functional service discovery when supplied with a combined OWL-S and OWL-Q request.
- The Service Selection Module realises the service-based workflow concretisation functionality. As service discovery is a prerequisite for service-based workflow concretisation, this module is able to invoke the Service Discovery Module in order to obtain all possible (service) alternatives for each BPaaS workflow task.

This high-level architecture is further elaborated by concentrating on the internal architecture of the latter two modules. This internal view also reveals the third (database) level, which is not apparent in the high-level architecture.

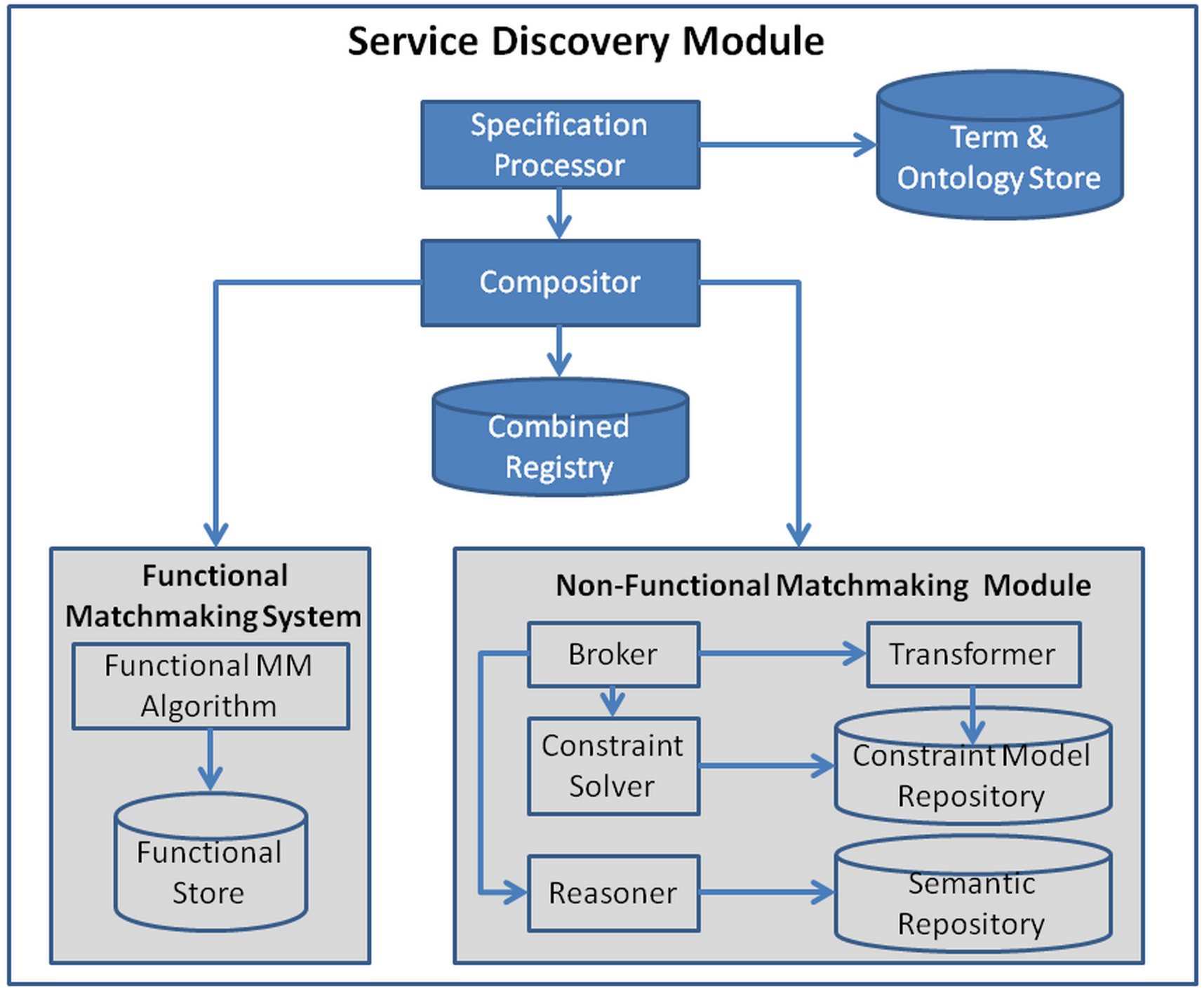

The architecture of the Service Discovery Module is depicted in the accompanying figure. The Specification Processor takes the user/broker input and validates it syntactically, semantically and in a constraint-based manner. Once the input is validated, its non-functional part is aligned by a non-functional alignment algorithm that operates over a (non-functional) term store. Both the aligned non-functional specification and the functional one are passed to the Compositor, which forwards them to the respective aspect-specific matchmakers: the Functional Matchmaking Module and the Non-Functional Matchmaking Module.

The actions performed depend on the type of functionality requested. In case of service registration, updating or deletion, the corresponding matchmaker performs the required update and then informs the Compositor of the result. For service registration and deletion, transactionality is guaranteed by the Compositor: if an aspect-specific registration or deletion fails for some reason, the operation on the other aspect is also rolled back so that the whole system remains in a consistent state. To assist in enforcing transactionality, the Compositor can consult and manage a Combined Registry, which stores the mappings between the functional and non-functional specifications of services.
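The rollback behaviour for registration can be sketched as follows. This is a minimal illustration under stated assumptions, not the tool's implementation: `InMemoryStore`, its `fail_on` switch and the method names are hypothetical, and the real stores hold OWL-S and OWL-Q specifications rather than plain strings.

```python
class InMemoryStore:
    """Hypothetical stand-in for an aspect-specific specification store."""

    def __init__(self, fail_on=None):
        self.specs = {}
        self.fail_on = set(fail_on or [])  # service ids whose registration fails

    def register(self, service_id, spec):
        if service_id in self.fail_on:
            raise RuntimeError("registration failed for " + service_id)
        self.specs[service_id] = spec

    def delete(self, service_id):
        self.specs.pop(service_id, None)


class Compositor:
    """Registers both aspects of a service, or neither of them."""

    def __init__(self, functional_store, non_functional_store):
        self.functional_store = functional_store
        self.non_functional_store = non_functional_store
        self.combined_registry = {}  # service id -> (functional, non-functional)

    def register(self, service_id, functional_spec, non_functional_spec):
        completed = []
        steps = (
            (self.functional_store, functional_spec),
            (self.non_functional_store, non_functional_spec),
        )
        try:
            for store, spec in steps:
                store.register(service_id, spec)
                completed.append(store)
        except RuntimeError:
            # Compensate: undo the aspect-specific registrations already done.
            for store in reversed(completed):
                store.delete(service_id)
            return False
        self.combined_registry[service_id] = (functional_spec, non_functional_spec)
        return True
```

In this sketch a failure in the non-functional store leaves no trace of the service in the functional store either, so the two stores and the combined registry never disagree about which services exist.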

In case of service matchmaking, the Compositor realises the composition logic of service discovery by executing different orchestration algorithms, such as sequential and parallel ones. In either case, the facilities of the respective matchmaking modules are exploited to support the required aspect-specific discovery functionality. Once both aspect-specific discovery results are obtained, the Compositor relays them back to the Specification Processor.
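The difference between the sequential and parallel orchestration can be sketched as below. The two matchers are deliberately trivial placeholders (a category comparison and a response-time bound), the offer fields are invented for the example, and combining the two result sets by intersection is an assumption about how the aspect-specific results are merged.

```python
from concurrent.futures import ThreadPoolExecutor


def functional_match(request, offer):
    """Placeholder for the functional (OWL-S based) matchmaker."""
    return offer["category"] == request["category"]


def non_functional_match(request, offer):
    """Placeholder for the non-functional (OWL-Q based) matchmaker."""
    return offer["response_time"] <= request["max_response_time"]


def matching_ids(matcher, request, offers):
    return {o["id"] for o in offers if matcher(request, o)}


def discover_sequential(request, offers):
    # The second matchmaker only sees what survived the first one.
    survivors = [o for o in offers if functional_match(request, o)]
    return [o["id"] for o in survivors if non_functional_match(request, o)]


def discover_parallel(request, offers):
    # Both matchmakers run over the full offer set; results are intersected.
    with ThreadPoolExecutor(max_workers=2) as pool:
        f_ids = pool.submit(matching_ids, functional_match, request, offers)
        nf_ids = pool.submit(matching_ids, non_functional_match, request, offers)
        both = f_ids.result() & nf_ids.result()
    return [o["id"] for o in offers if o["id"] in both]


OFFERS = [
    {"id": "a", "category": "payment", "response_time": 0.4},
    {"id": "b", "category": "payment", "response_time": 2.5},
    {"id": "c", "category": "storage", "response_time": 0.2},
]
REQUEST = {"category": "payment", "max_response_time": 1.0}
```

Both strategies return the same offers here; they differ only in how much work each matchmaker does and whether the two can overlap in time. The sketch shows the control flow only and says nothing about the actual speed-up, which depends on the matchmakers involved.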

The Functional Matchmaking Module is a slightly modified version of the service matchmaker developed in the Alive European project. Apart from being semantic, this matchmaker employs a smart structure and a respective dynamic encoding scheme for domain ontologies that enables discovering concept ancestors or descendants in O(1) time. This greatly reduces the functional service matchmaking time, since such ontology-based functions are used heavily during matchmaking. For simplicity, the internal architecture of this module can be seen as a combination of two main components: (a) the functional matchmaking algorithm and (b) the Functional Store, the storage medium for the functional specifications of services.
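The encoding scheme used by the matchmaker is not detailed here. As an illustration of how an encoding can make ancestor checks constant-time, the sketch below uses a different, well-known technique: interval labelling of a concept tree by depth-first traversal. It is a simplification, since it assumes a static, single-inheritance hierarchy, whereas the text above refers to a dynamic scheme for domain ontologies; the concept names are invented.

```python
def encode(tree, root):
    """Label each concept with a (start, end) interval via depth-first traversal.

    `tree` maps a concept to the list of its direct sub-concepts.
    A concept's interval encloses the intervals of all its descendants.
    """
    labels = {}
    counter = 0

    def visit(node):
        nonlocal counter
        start = counter
        counter += 1
        for child in tree.get(node, []):
            visit(child)
        labels[node] = (start, counter)  # end is exclusive

    visit(root)
    return labels


def is_ancestor(labels, a, b):
    """Return True if concept `a` is a proper ancestor of concept `b`.

    Two dictionary lookups and two comparisons: O(1) per query.
    """
    start_a, end_a = labels[a]
    start_b, end_b = labels[b]
    return start_a < start_b and end_b <= end_a


# Hypothetical domain taxonomy.
TAXONOMY = {
    "Thing": ["Service", "Data"],
    "Service": ["PaymentService", "StorageService"],
    "PaymentService": ["CreditCardPayment"],
}
LABELS = encode(TAXONOMY, "Thing")
```

After the one-off labelling pass, a subsumption-style check such as `is_ancestor(LABELS, "Service", "CreditCardPayment")` needs no traversal of the hierarchy, which is the property that makes such encodings attractive for matchmaking.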

The Non-Functional Matchmaking Module is a collection of different non-functional matchmaking algorithms, which exploit two different techniques to perform the matchmaking: (a) constraint programming / solving and (b) ontology subsumption. The internal architecture of this module can be seen in the following figure; it comprises six main components. The Broker is responsible for orchestrating the actions needed to conduct the service matchmaking. It realises the service matchmaking logic internally and exploits the facilities of other components to realise part of the needed functionality. In particular, in case of constraint-based service matchmaking, the service request is transformed into a constraint satisfaction problem (CSP) via the Transformer. This CSP is then used, together with the CSP model of the corresponding non-functional service specification, to evaluate a matchmaking metric by exploiting the facilities of the Constraint Solver. In case of ontology-based service matchmaking, an Ontology Reasoner is exploited to infer the subsumption hierarchy either between a pair of service request and offer or between the service request and all the non-functional offers, depending on the ontology-based non-functional service matchmaking algorithm being used.
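One way to picture constraint-based non-functional matchmaking is the sketch below. It assumes a particular matching criterion, which the text does not specify: an offer matches when every QoS value it may deliver is acceptable to the request. It also assumes that each constraint is a simple closed range on a single metric, in which case the check reduces to interval containment and no solver is needed; the metric names and bounds are invented.

```python
def matches(request, offer):
    """Return True if every value the offer may deliver satisfies the request.

    Both arguments map a metric name to a (low, high) range: for the request,
    the acceptable values; for the offer, the values it guarantees to stay in.
    """
    for metric, (req_low, req_high) in request.items():
        if metric not in offer:
            return False  # the offer says nothing about a required metric
        off_low, off_high = offer[metric]
        if off_low < req_low or off_high > req_high:
            return False  # some promised value falls outside the request
    return True


# Hypothetical request: response time at most 2 s, availability at least 99 %.
REQUEST = {"response_time": (0.0, 2.0), "availability": (0.99, 1.0)}

OFFERS = {
    "fast": {"response_time": (0.2, 1.5), "availability": (0.995, 1.0)},
    "slow": {"response_time": (0.5, 3.0), "availability": (0.999, 1.0)},
    "vague": {"response_time": (0.1, 0.5)},
}

MATCHING = [name for name, offer in OFFERS.items() if matches(REQUEST, offer)]
```

With constraints that relate several metrics, or that are non-linear, containment can no longer be checked range by range, which is where transforming the specifications into CSPs and invoking a constraint solver becomes necessary.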

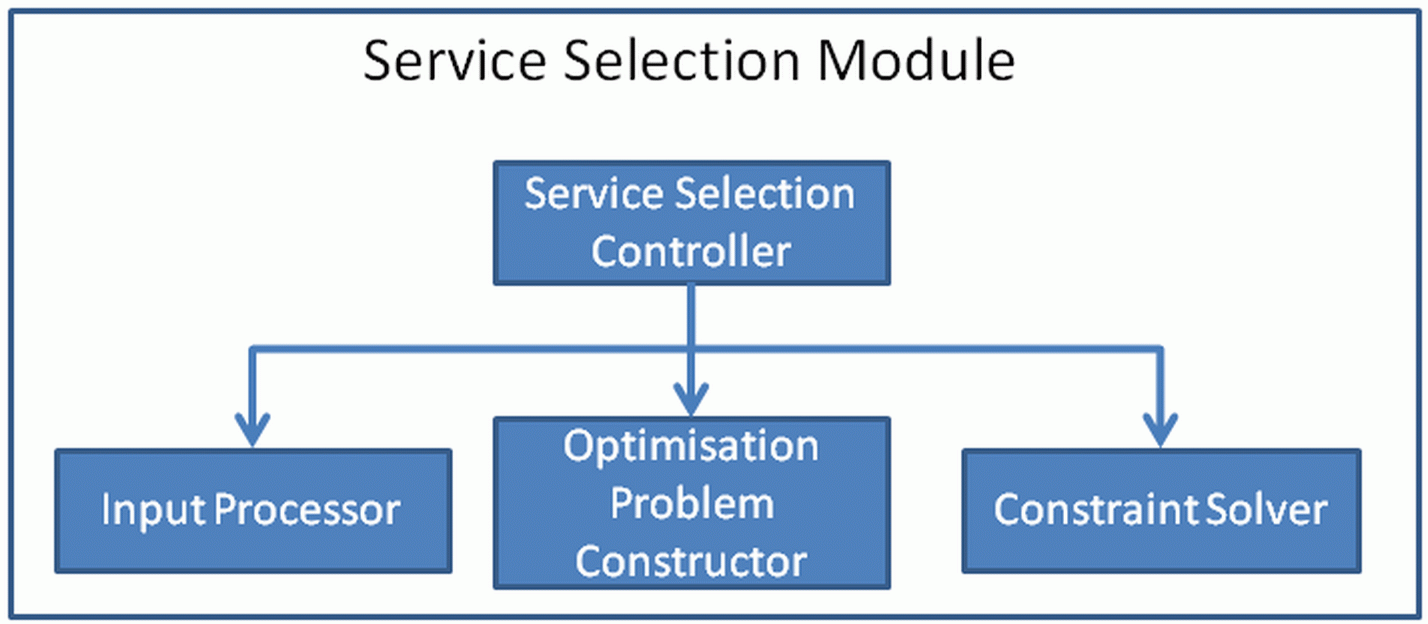

The Service Selection Module follows a simplified architecture, depicted in the accompanying figure, which comprises three main components. The Service Selection Controller is responsible for orchestrating the components involved in the service-based workflow concretisation. It first invokes the Input Processor to construct the input needed for building the optimisation problem. Based on the semantically annotated service-based BPaaS workflow, the Input Processor performs service discovery for all the tasks involved, according to the task-based functional and non-functional broker requirements provided in the form of annotations. It also takes into account the BPaaS CAMEL model in order to discover those IaaS offerings that satisfy the requirements posed for the BPaaS internal service components. Once all input has been gathered and extended, the Service Selection Controller passes it to the Optimisation Problem Constructor, which transforms it into a constraint optimisation problem. The latter problem is finally sent by the Service Selection Controller to the Constraint Solver for solving.

The source code is publicly available at GitLab.