Semantic Knowledge Base

Summary

The Semantic Knowledge Base (Semantic KB) is a composite component of the BPaaS Evaluation Environment, responsible for storing and managing the semantic information collected by the Harvesting Engine. Most of the other components in the evaluation environment depend on it, as they all need to interact with it to retrieve or store semantic information. The only components currently independent of the Semantic KB are those at the upper level of the evaluation environment architecture, i.e., the Hybrid Business Dashboard and the Hybrid Business Management Tool. This is because the Semantic KB is not directly involved in the execution of any specific BPaaS analysis functionality.

This component has been realised as a REST API on top of an existing Triple Store, Virtuoso. The API offers typical semantic data management functionalities, such as querying, importing, exporting, inserting, updating and deleting. Using a Triple Store also makes further, more triple-store-specific facilities available. Relying on them is not required, however: clients should use the REST API instead, which decouples them from the technical peculiarities of the underlying Triple Store and thus allows it to be replaced by another implementation in the future.

The main functionalities offered by the REST API can be summarised as follows:

- SPARQL query issuing -- the main functionality, used extensively by all analysis engines

- semantic information importing -- various formats are supported, but Turtle is recommended for performance reasons

- semantic information insertion/updating/deletion -- triples can be inserted, updated or deleted at any time by issuing SPARUL statements through the Sesame/rdf4j interface bridge of Virtuoso

- semantic information exporting -- several formats are supported; as with importing, Turtle is recommended, in this case also because it yields smaller files

For administration purposes, Virtuoso also offers the Virtuoso Conductor, a web interface for managing the triple store. It supports creating and deleting whole graphs, querying the semantic information, and importing and updating it. It therefore complements the API: through it, an administrator can intervene at any time to browse, inspect and, if needed, adjust the content of the Triple Store.

This component can easily be replaced by another one developed outside CloudSocket. The only requirement for such a replacement is that it realises the same REST interface, as this interface is currently used by many other components of the BPaaS Evaluation Environment architecture (including the Harvesting Engine, the Process Mining Engine, the Deployment Discovery Engine and the Conceptual Analytics Engine).

The following table indicates the details of the component.

| Type of ownership | Update |
|---|---|
| Original tool | Virtuoso Triple Store |
| Planned OS license | Mozilla Public License 2.0 |
| Reference community | ADOxx Community |

Consists of

- Semantic KB Service (REST API exposing the core functionality of the Semantic KB)

Depends on

- This component is entirely independent of the others in the BPaaS Evaluation Environment Architecture

Component responsible

| Developer | E-mail | Company |
|---|---|---|
| Kyriakos Kritikos | kritikos@ics.forth.gr | FORTH |

Architecture Design

The Semantic KB is coloured orange in the overall BPaaS Evaluation Environment architecture depicted in the figure below. As can be seen, it is invoked by all the engines of this environment.

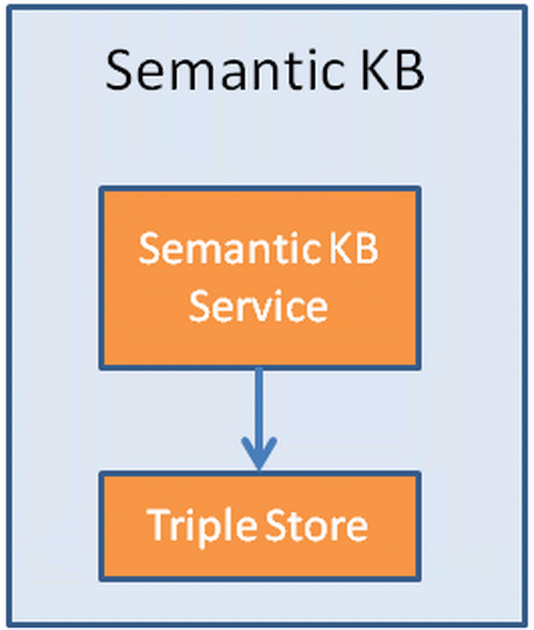

The final internal architecture of the Semantic KB, depicted below, comprises the following two main components:

- Semantic KB Service: A REST service which encapsulates the semantic data management functionality exposed by the Semantic KB in the form of a set of REST API methods.

- Triple Store: the internal triple store (Virtuoso), which provides the facilities on which the semantic data management functionality is realised. The Semantic KB Service is implemented on top of Sesame and uses Virtuoso's Sesame interface bridge to connect to the triple store and execute the requested semantic data management operations.

Architecture of the Semantic KB.

Installation Manual

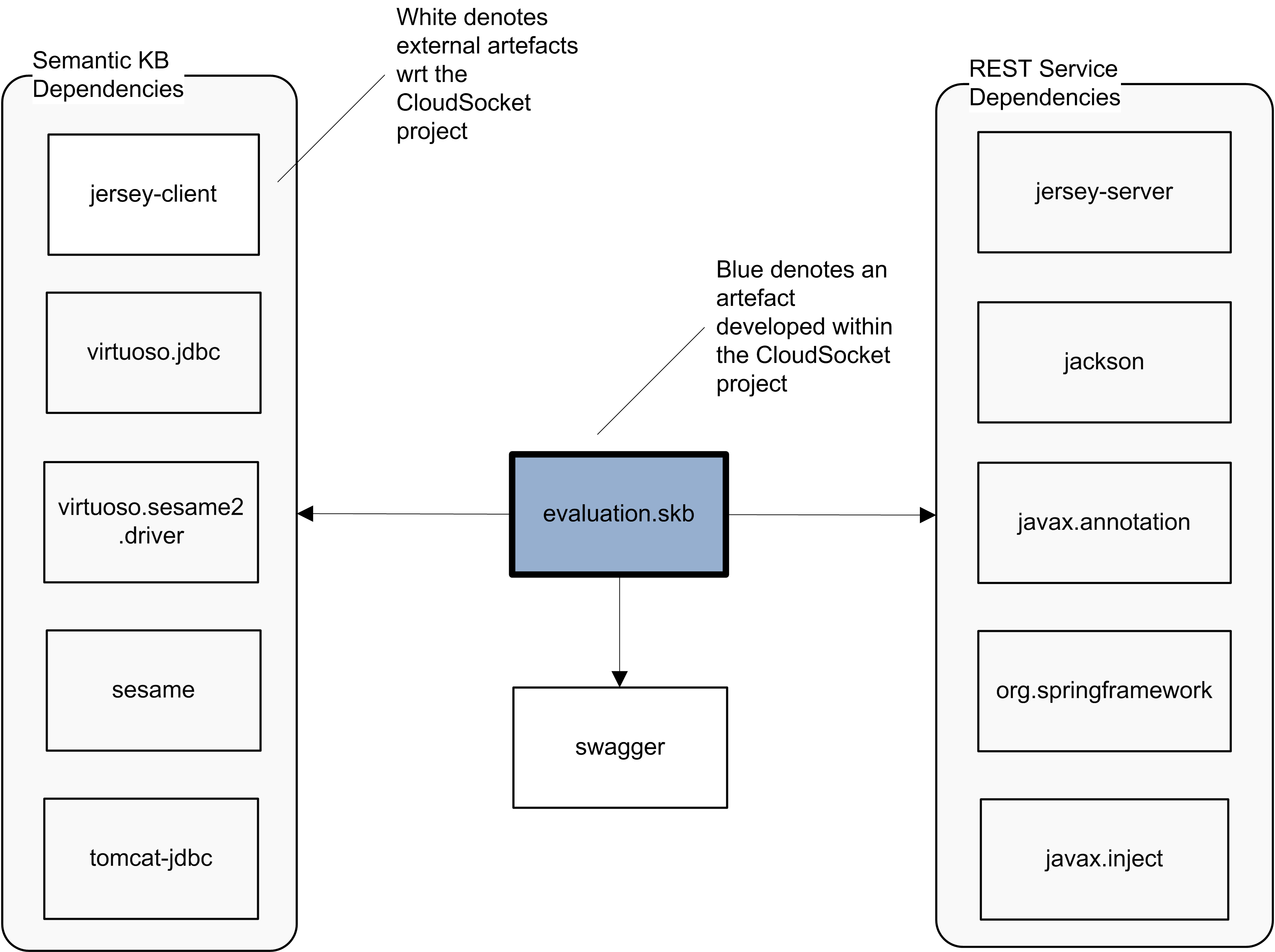

The component has been implemented purely in Java. It depends on external components/frameworks such as Spring, Swagger, Jersey and Sesame/rdf4j. The following figure shows the sole artefact generated for this component as well as its dependencies on other artefacts (at a coarse-grained level, to simplify the figure).

Artifact dependencies for the Semantic KB.

Maven has been used as the main build automation tool, prescribing how the component/artefact is built and what its main dependencies are. It facilitates building and automatically generating this artefact, with the appropriate configuration, for the different CloudSocket environments (development and production). A complete description of how to build and generate the artefact for each environment is given below.

Development

Currently, the following requirements hold for this component:

- Oracle's 1.7.x JDK or higher

- Apache Tomcat 7 or higher

- Maven tool for code compilation and packaging

The installation procedure to be followed is the one given below:

- Download the source code from https://omi-gitlab.e-technik.uni-ulm.de/cloudsocket/evaluation_skb/repository/archive.tar?ref=master (to be modified)

- Extract the archive with tar (or any other tool)

- Go to the root directory of the extracted code

- Edit the configuration file to provide the access information for the Virtuoso Triple Store (https://virtuoso.openlinksw.com/)

- Run `mvn clean install` and then `mvn war:war`

- Move the generated war file to the webapps directory of Tomcat and start Tomcat, if it is not already running

- Test the installation by opening the following URL in your browser: http://localhost:8080/skb/ (eventual endpoint)

Production

The same instructions as for the development environment apply.

Test Cases

Test cases can be run directly from the URL of the REST service (http://localhost:8080/skb in your development environment), since the service uses Swagger (http://swagger.io), which allows each exposed API method to be executed with user input provided through a form.

Loading the API page shows that the service is up and running, but its correct functioning still needs to be tested. This correct functioning depends on the proper functioning of the Virtuoso Triple Store. Therefore, the tester should also try to connect to the Virtuoso Conductor via the corresponding URL (http://localhost:8890 for a local installation or http://134.60.64.222:8890 for an existing remote installation). If this succeeds, he/she can also check whether some functionality can be executed, e.g., by running a simple SPARQL query. Once all these steps are performed, the actual testing of the API can proceed.
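The two availability checks just described can also be scripted. The following is a minimal Python sketch (standard library only, not part of the component): the hypothetical helper `probe` fetches a URL and returns its HTTP status code, or `None` when nothing answers. The URLs are the local defaults given above and should be replaced for a remote installation.

```python
import urllib.error
import urllib.request

# Local defaults from this manual; replace them for a remote installation.
SKB_URL = "http://localhost:8080/skb/"
CONDUCTOR_URL = "http://localhost:8890/"


def probe(url, timeout=5.0):
    """Return the HTTP status code served at `url`, or None if no HTTP response arrives."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (e.g. 404 if the war is not deployed).
        return err.code
    except OSError:
        # URLError (refused connection, unknown host) and socket timeouts derive from OSError.
        return None


if __name__ == "__main__":
    # Check the triple store first, since the service depends on it.
    for name, url in [("Virtuoso Conductor", CONDUCTOR_URL), ("Semantic KB Service", SKB_URL)]:
        code = probe(url)
        print(f"{name} at {url}: {'no response' if code is None else code}")
```

A response from both URLs only proves that the servers are reachable; running a simple SPARQL query, in the Conductor or via "ldQuery", remains the actual functional check.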

The following two test cases are envisioned, which map to user stories involving a semantic/ontology expert (acting also as administrator of the Semantic KB). Such a user is needed here because correctly carrying out the test cases requires the respective expertise and knowledge. In principle, the broker should employ such a user to take care of the administration of the underlying triple store.

- Linked Data Querying

This test case checks whether the querying functionality of the API operates normally and returns correct results. The results can also be compared with those returned by the native or the Conductor interface of Virtuoso. Note that, in such a comparison, the order of the results may differ unless the query contains elements (e.g., an ORDER BY clause) that impose a specific order. Note also that this type of testing can be used to check whether the harvesting and linking of semantic information is correct.

Relying on the latter observation, this test case runs a specific test query of the Harvesting Engine to check the correctness of the harvesting and semantic linking. Suppose that the user wants to run the first test query, which retrieves all BPaaS workflow tasks together with their input and output parameters. He/she can then browse the methods of the API and click on the one named "ldQuery". The following screenshot shows the upper part of the method description once selected:

Swagger overview of the "ldQuery" method of the Semantic KB Service.

Next, he/she fills in the input parameters, i.e., the URI of the graph mapping to the broker-specific fragment, the SPARQL test query itself, the timeout value and the maximum number of rows to be returned. As the user is willing to wait, no value is provided for the "timeout" parameter; and as all results are desired, the default value of the "maxRows" parameter is left to apply. Once all parameters are filled in, he/she can press the "Try it out!" button.

Finally, he/she will be able to see the respective curl command issued, the request URL, the corresponding results, as well as the response status and headers as indicated in the following figure:

Result of calling the ldQuery method of the Semantic KB Service.

- Graph Deletion

This test case checks whether the updating functionality of the Semantic KB Service works correctly. It maps to a common situation in a deployment environment: the triple store administrator wants to remove the broker-specific graphs in order to restart the harvesting and linking of deployment, monitoring and registry information after a bug fix. This task can of course be executed via the Virtuoso Conductor, which enables intuitive browsing and management of all created graphs, but here the focus is on testing the Semantic KB Service. This means that a SPARUL statement needs to be executed via the "ldUpdate" method of this service in order to delete these graphs. The following user story applies to this test case.

Suppose that the user wants to delete the graphs of the only broker currently served, namely "bwcon". To this end, he/she first browses the methods of the API and clicks on the one named "ldUpdate". The following screenshot shows the upper part of the method description once selected:

Swagger overview of the "ldUpdate" method of the Semantic KB Service.

Next, he/she fills in the input parameters. In this case, only the first parameter, "update", needs to be provided, with the SPARUL statement "DROP <http://www.cloudsocket.eu/evaluation/bwcon>; DROP <http://www.cloudsocket.eu/evaluation/bwcon/kpis>; DROP <http://www.cloudsocket.eu/evaluation/bwcon/history>" (these are the graphs mapping to the "bwcon" broker); the remaining parameters can be left empty. Once all parameters are filled in, he/she can press the "Try it out!" button.
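When this clean-up has to be repeated, for instance after every harvesting bug fix, the SPARUL statement can be generated rather than typed. The following is a hypothetical Python helper, not part of the service: `broker_graphs` assumes that every broker's graphs follow the pattern of the three "bwcon" graphs above, which is inferred from that single example. The sketch emits the standard SPARQL 1.1 Update form `DROP GRAPH <uri>`; the abbreviated `DROP <uri>` used above is not part of the SPARQL 1.1 grammar, so it is worth checking which form the deployed Virtuoso version accepts.

```python
EVALUATION_BASE = "http://www.cloudsocket.eu/evaluation"

# Characters not allowed inside a SPARQL IRIREF (besides control chars and space).
_FORBIDDEN = set('<>"{}|^`\\')


def broker_graphs(broker):
    """Graph URIs of a broker, assuming the naming pattern of the "bwcon" graphs."""
    root = f"{EVALUATION_BASE}/{broker}"
    return [root, f"{root}/kpis", f"{root}/history"]


def drop_statement(graph_uris, silent=False):
    """Build one SPARQL 1.1 Update request that drops every graph in `graph_uris`."""
    ops = []
    for uri in graph_uris:
        # Reject IRIs that would break out of <...> and corrupt the update request.
        if any(c in _FORBIDDEN or ord(c) <= 0x20 for c in uri):
            raise ValueError(f"not a valid IRI for a SPARQL update: {uri!r}")
        # SILENT makes the operation succeed even if the graph does not exist.
        ops.append(f"DROP {'SILENT ' if silent else ''}GRAPH <{uri}>")
    return "; ".join(ops)


print(drop_statement(broker_graphs("bwcon")))
```

The printed string is what would go into the "update" parameter of "ldUpdate".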

Finally, he/she will be able to see the respective curl command issued, the request URL, the corresponding results, as well as the response status and headers as indicated in the following figure:

Result of calling the ldUpdate method of the Semantic KB Service.

User Manual

API Specification

The reader should refer to the Swagger-based API web page (http://localhost:8080/skb for a local installation or http://134.60.64.222:8080/skb for an existing remote installation) to browse the on-line API documentation, which also allows the API methods to be executed. The API methods of the Semantic KB Service are analysed below:

ldQuery

This method runs a SPARQL query over the underlying Triple Store:

POST skb/ldQuery

The expected input format is multipart/form-data. The following input parameters are expected:

- query: denotes the SPARQL query to be issued (obligatory)

- graphURI: denotes the URI of the graph to be queried. This parameter is optional as the URI of the graph can be provided in the FROM clause of the query (optional)

- timeout: denotes the maximum amount of time to wait for the query results. It defaults to 0, which means unlimited (optional)

- maxRows: denotes the maximum number of query results to be returned. It defaults to 0, which means all. This parameter is ignored if the query contains a LIMIT clause, i.e., the query semantics take priority over this parameter (optional)
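For scripted use outside Swagger, the following minimal Python sketch prepares an ldQuery call with the parameters listed above, using only the standard library. It rests on two assumptions not confirmed by this manual: that `POST skb/ldQuery` resolves to http://localhost:8080/skb/ldQuery on a local installation (the request URL shown by Swagger's "Try it out!" gives the exact path), and that the output format is selected through the HTTP `Accept` header, as the 406 response code suggests. The helper names are hypothetical.

```python
import urllib.request
import uuid

# Assumption: "POST skb/ldQuery" resolves under this base URL on a local installation.
SKB_BASE = "http://localhost:8080/skb"


def encode_multipart(fields):
    """Encode text fields as multipart/form-data; returns (body bytes, Content-Type value)."""
    boundary = uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines += [f"--{boundary}", f'Content-Disposition: form-data; name="{name}"', "", str(value)]
    lines += [f"--{boundary}--", ""]
    return "\r\n".join(lines).encode("utf-8"), f"multipart/form-data; boundary={boundary}"


def ld_query_request(query, graph_uri=None, timeout=0, max_rows=0,
                     accept="application/sparql-results+json"):
    """Prepare (without sending) an ldQuery call; 0 means unlimited timeout / all rows."""
    fields = {"query": query, "timeout": timeout, "maxRows": max_rows}
    if graph_uri is not None:
        fields["graphURI"] = graph_uri  # may be omitted when the query has a FROM clause
    body, content_type = encode_multipart(fields)
    # Assumption: the result format is negotiated through the Accept header.
    return urllib.request.Request(f"{SKB_BASE}/ldQuery", data=body, method="POST",
                                  headers={"Content-Type": content_type, "Accept": accept})


req = ld_query_request("SELECT ?s WHERE { ?s ?p ?o } LIMIT 10",
                       graph_uri="http://www.cloudsocket.eu/evaluation/bwcon")
# To actually send it:
# with urllib.request.urlopen(req) as resp:
#     print(resp.read().decode("utf-8"))
```

Because the sample query contains `LIMIT 10`, the service would ignore the `maxRows` value sent along with it, as described above.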

The following response codes can be returned depending on whether the API method execution was successful or not:

- 200 -- The method execution was successful

- 400 -- Wrong parameter values have been provided

- 401 -- Access denied exception

- 404 -- The resource requested was not found

- 406 -- The format requested for the method output is not supported

The output can be returned in various SPARQL result formats ("application/sparql-results+xml", "application/sparql-results+json", "text/csv" and "text/tab-separated-values"). A sample of the JSON-based output is given below:

```json
{ "head": { "link": [], "vars": ["bpaas", "id"] },
  "results": { "distinct": false, "ordered": true, "bindings": [
    { "bpaas": { "type": "uri", "value": "http://www.cloudsocket.eu/evaluation#BPaaS_bwcon_SendInvoiceSaaSEurope" },
      "id": { "type": "typed-literal", "datatype": "http://www.w3.org/2001/XMLSchema#string", "value": "SendInvoiceSaaSEurope" } },
    { "bpaas": { "type": "uri", "value": "http://www.cloudsocket.eu/evaluation#BPaaS_bwcon_SendInvoiceSaaSWorldwide" },
      "id": { "type": "typed-literal", "datatype": "http://www.w3.org/2001/XMLSchema#string", "value": "SendInvoiceSaaSWorldwide" } },
    { "bpaas": { "type": "uri", "value": "http://www.cloudsocket.eu/evaluation#BPaaS_bwcon_SendInvoiceIaaSEurope" },
      "id": { "type": "typed-literal", "datatype": "http://www.w3.org/2001/XMLSchema#string", "value": "SendInvoiceIaaSEurope" } } ] }
}
```
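Clients such as the analysis engines usually only need the plain values of each solution. The following minimal Python sketch (a hypothetical helper, standard library only) flattens a JSON result like the sample above into one dictionary per solution.

```python
import json


def sparql_json_rows(text):
    """Turn a SPARQL JSON result document into a list of {variable: value} dicts.

    Only the "value" of each binding is kept, so "literal" and the "typed-literal"
    type used in the sample are handled alike; unbound variables map to None.
    """
    doc = json.loads(text)
    variables = doc["head"]["vars"]
    return [{v: b[v]["value"] if v in b else None for v in variables}
            for b in doc["results"]["bindings"]]


sample = """{ "head": { "link": [], "vars": ["bpaas", "id"] },
  "results": { "distinct": false, "ordered": true, "bindings": [
    { "bpaas": { "type": "uri", "value": "http://www.cloudsocket.eu/evaluation#BPaaS_bwcon_SendInvoiceSaaSEurope" },
      "id": { "type": "typed-literal", "datatype": "http://www.w3.org/2001/XMLSchema#string",
              "value": "SendInvoiceSaaSEurope" } } ] } }"""

for row in sparql_json_rows(sample):
    print(row["id"])  # prints SendInvoiceSaaSEurope
```

Keeping only `value` drops the type and datatype information; a client that must distinguish URIs from literals should also read the `type` field.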

ldExport

This method exports the contents of a graph, or part of them, in various formats (RDF/XML, Turtle, CSV, etc.):

POST skb/ldExport

The expected input format is multipart/form-data. The following input parameters are expected:

- graphURI: denotes the URI of the graph whose content should be exported. If this URI is not given, then the default graph in the Triple Store is assumed (optional)

- subjURI: denotes the URI of the subject resource to be exported. If this URI is given, then only statements containing this URI as subject are exported (optional)

- predURI: denotes the URI of the predicate to be exported. If this URI is given, then only statements containing this predicate are exported (optional)

- objURI: denotes the URI of the object resource to be exported. If this URI is given, then only statements containing this URI as object are exported (optional)
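A scripted ldExport call could be prepared along the same lines. This minimal Python sketch builds a request similar to the one behind the Turtle sample further below (only statements with the allocation predicate for the bwcon broker); the graph URI http://www.cloudsocket.eu/evaluation/bwcon is taken from the graph deletion test case, while the URL path and the use of the `Accept` header to choose the export format are assumptions. Parameters left as `None` are not sent, so the service falls back to its defaults (default graph, no filtering).

```python
import urllib.request
import uuid

SKB_BASE = "http://localhost:8080/skb"  # assumption: local installation


def encode_multipart(fields):
    """Encode text fields as multipart/form-data; returns (body bytes, Content-Type value)."""
    boundary = uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines += [f"--{boundary}", f'Content-Disposition: form-data; name="{name}"', "", str(value)]
    lines += [f"--{boundary}--", ""]
    return "\r\n".join(lines).encode("utf-8"), f"multipart/form-data; boundary={boundary}"


def ld_export_request(graph_uri=None, subj_uri=None, pred_uri=None, obj_uri=None,
                      accept="text/turtle"):
    """Prepare an ldExport call; parameters left as None are not sent at all."""
    filters = {"graphURI": graph_uri, "subjURI": subj_uri, "predURI": pred_uri, "objURI": obj_uri}
    body, content_type = encode_multipart({k: v for k, v in filters.items() if v is not None})
    # Assumption: the export format is chosen via the Accept header.
    return urllib.request.Request(f"{SKB_BASE}/ldExport", data=body, method="POST",
                                  headers={"Content-Type": content_type, "Accept": accept})


# Only the statements using the "allocation" predicate in the bwcon graph.
req = ld_export_request(graph_uri="http://www.cloudsocket.eu/evaluation/bwcon",
                        pred_uri="http://www.cloudsocket.eu/evaluation#allocation")
```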

The following response codes can be returned depending on whether the API method execution was successful or not:

- 200 -- The method execution was successful

- 400 -- Wrong parameter values have been provided

- 401 -- Access denied exception

- 404 -- The resource requested was not found

- 406 -- The format requested for the method output is not supported

- 500 -- An internal server error has occurred preventing the service from appropriately serving the request

The output can be in various formats (RDF/XML, Turtle, N-Triples, CSV, etc.). A sample of the Turtle output is given below (produced by requesting only the statements involving the <http://www.cloudsocket.eu/evaluation#allocation> predicate for the bwcon broker):

```turtle
@prefix : <http://www.cloudsocket.eu/evaluation#> .

:BPaaS_SendInvoice :allocation :Workflow_SendInvoice_Allocation2, :Workflow_SendInvoice_Allocation1 .
:BPaaS_ChristmasSending :allocation :Workflow_ChristmasSending_Allocation2, :Workflow_ChristmasSending_Allocation1 .
```

ldImport

This method imports linked data content, which can either be placed in the request body or be downloaded from a URL given as input:

POST skb/ldImport

For inline content, the request body is expected in "application/rdf+xml", "text/rdf+n3" or "text/turtle" format, while all other inputs are passed as query parameters. The following input parameters are expected:

- url: denotes the URL from which the linked data content can be downloaded. It is optional as there is also the option to provide this content inline in the request (optional)

- uri: denotes the URI of the graph that will be mapped to the imported content. Absence of this parameter signifies that the imported content will be inserted in the default graph of the Triple Store (optional)

- format: denotes the format of the content to be imported. Only required when the content is to be downloaded from the given URL (optional)

- blocking: indicates whether the request should block until the import ends. If not, the method returns an id that can be used to query the status of the import via the "importStatus" method (optional)
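The following minimal Python sketch prepares an ldImport call for the inline case, with the content in the request body and the remaining inputs as query parameters. Two points are assumptions: that `blocking` is passed as `true`/`false`, and that the path is http://localhost:8080/skb/ldImport. The accepted values of `format` are not documented here, so the sketch passes it through unchanged. Requiring exactly one of inline content or `url` is a design choice of this sketch, not a documented rule of the service.

```python
import urllib.parse
import urllib.request

SKB_BASE = "http://localhost:8080/skb"  # assumption: local installation


def ld_import_request(turtle=None, url=None, graph_uri=None, fmt=None, blocking=True):
    """Prepare an ldImport call with either inline Turtle content or a source URL."""
    if (turtle is None) == (url is None):
        raise ValueError("give exactly one of `turtle` (inline content) or `url`")
    # Assumption: booleans are passed as "true"/"false" query-string values.
    params = {"blocking": "true" if blocking else "false"}
    if graph_uri is not None:
        params["uri"] = graph_uri  # omitted -> default graph of the Triple Store
    if url is not None:
        params["url"] = url
        if fmt is not None:
            params["format"] = fmt  # only needed for downloaded content
    target = f"{SKB_BASE}/ldImport?{urllib.parse.urlencode(params)}"
    if turtle is None:
        return urllib.request.Request(target, data=b"", method="POST")
    return urllib.request.Request(target, data=turtle.encode("utf-8"), method="POST",
                                  headers={"Content-Type": "text/turtle"})


req = ld_import_request(
    turtle="@prefix : <http://www.cloudsocket.eu/evaluation#> .\n"
           ":BPaaS_SendInvoice :allocation :Workflow_SendInvoice_Allocation1 .\n",
    graph_uri="http://www.cloudsocket.eu/evaluation/bwcon",
    blocking=False)
```

With `blocking=False`, the response would carry an import id of the kind shown in the sample below.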

The following response codes can be returned depending on whether the API method execution was successful or not:

- 200 -- The method execution was successful

- 400 -- Wrong parameter values have been provided

- 401 -- Access denied exception

- 404 -- The resource requested was not found

- 406 -- The format requested for the method output is not supported

- 500 -- An internal server error has occurred preventing the service from appropriately serving the request

- 503 -- The service is not available

The output will be in either JSON or XML form. A sample of the JSON form for an output is given below (highlighting that a non-blocking request was issued for which the importing is currently running):

```json
{
  "importID": "100",
  "status": "RUNNING"
}
```

importStatus

This method returns the status of an ongoing RDF import request:

POST skb/importStatus

The expected input format is multipart/form-data. The following input parameters are expected:

- importId: denotes the identifier of an ongoing import request, as returned by a non-blocking call of the "ldImport" method (obligatory)

The following response codes can be returned depending on whether the API method execution was successful or not:

- 200 -- The method execution was successful

- 400 -- Wrong parameter values have been provided

- 401 -- Access denied exception

- 404 -- The resource requested was not found

- 500 -- An internal server error has occurred preventing the service from appropriately serving the request

The output will be in either JSON or XML form. A sample of the JSON output is given below (almost the same as for the "ldImport" method, but now denoting a successful result):

```json
{
  "importID": "100",
  "status": "FINISHED"
}
```
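A non-blocking import is typically followed by polling. The following minimal Python sketch repeats an importStatus call until the status is no longer `RUNNING`. `fetch_status` is a hypothetical callable that would perform the actual POST with the `importId` parameter; it is injected, together with `sleep`, so that the loop can be exercised without a running service. Only the `RUNNING` and `FINISHED` values appear in this manual, so treating every other value as final is an assumption. Note that the response field is spelled `importID` while the request parameter is `importId`.

```python
import json
import time


def wait_for_import(fetch_status, import_id, poll_every=2.0, max_wait=600.0, sleep=time.sleep):
    """Poll importStatus until an import is no longer RUNNING and return its final status.

    `fetch_status(import_id)` is a hypothetical callable returning the JSON body
    of a POST skb/importStatus call as a string.
    """
    waited = 0.0
    while True:
        status = json.loads(fetch_status(import_id))["status"]
        if status != "RUNNING":  # any status other than RUNNING is treated as final
            return status
        if waited >= max_wait:
            raise TimeoutError(f"import {import_id} still RUNNING after {waited:.0f} s")
        sleep(poll_every)
        waited += poll_every


# Usage with canned responses instead of a live service:
canned = iter(['{"importID": "100", "status": "RUNNING"}',
               '{"importID": "100", "status": "FINISHED"}'])
print(wait_for_import(lambda _id: next(canned), "100", sleep=lambda s: None))  # prints FINISHED
```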

ldUpdate

This method updates the content of an existing graph in the Triple Store by issuing a SPARUL statement:

POST skb/ldUpdate

The expected input format is multipart/form-data. The following input parameters are expected:

- update: denotes the update statement in SPARUL (obligatory)

- baseURI: denotes the base URI via which relative URI statements in the SPARUL query can be resolved (optional)

- timeout: denotes the maximum amount of time that the broker can wait until the update is performed (optional)

- maxRows: indicates the maximum number of rows that can be affected by the execution of the SPARUL statement (optional)

The following response codes can be returned depending on whether the API method execution was successful or not:

- 200 -- The method execution was successful

- 400 -- Wrong parameter values have been provided

- 401 -- Access denied exception

- 500 -- An internal server error has occurred preventing the service from appropriately serving the request

On success, the response contains the following message:

Update was performed successfully
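An ldUpdate call can be scripted in the same way. The following minimal Python sketch prepares the multipart request and interprets the response using the success message above; the path http://localhost:8080/skb/ldUpdate and the test graph http://example.org/skb-test are illustrative assumptions, and the helper names are hypothetical. Note that `urllib.request.urlopen` raises `HTTPError` for the 400, 401 and 500 codes, so error statuses surface as exceptions rather than reaching `update_succeeded`.

```python
import urllib.request
import uuid

SKB_BASE = "http://localhost:8080/skb"  # assumption: local installation
SUCCESS_MESSAGE = "Update was performed successfully"


def encode_multipart(fields):
    """Encode text fields as multipart/form-data; returns (body bytes, Content-Type value)."""
    boundary = uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines += [f"--{boundary}", f'Content-Disposition: form-data; name="{name}"', "", str(value)]
    lines += [f"--{boundary}--", ""]
    return "\r\n".join(lines).encode("utf-8"), f"multipart/form-data; boundary={boundary}"


def ld_update_request(update, base_uri=None, timeout=None, max_rows=None):
    """Prepare an ldUpdate call; optional parameters left as None are not sent."""
    optional = {"baseURI": base_uri, "timeout": timeout, "maxRows": max_rows}
    fields = {"update": update, **{k: v for k, v in optional.items() if v is not None}}
    body, content_type = encode_multipart(fields)
    return urllib.request.Request(f"{SKB_BASE}/ldUpdate", data=body, method="POST",
                                  headers={"Content-Type": content_type})


def update_succeeded(status, body):
    """An update counts as successful on HTTP 200 carrying the documented message."""
    return status == 200 and SUCCESS_MESSAGE in body


req = ld_update_request(
    'INSERT DATA { GRAPH <http://example.org/skb-test> { <http://example.org/s> <http://example.org/p> "o" } }')
# with urllib.request.urlopen(req) as resp:
#     print(update_succeeded(resp.status, resp.read().decode("utf-8")))
```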